Threat intelligence: OTX, Bro, SiLK, BIND RPZ, OSSEC

Building a toolbox around threat intelligence can be done with freely available tools. Shared information about malicious behaviour allows you to detect and sometimes prevent activity from – and to – Internet resources that could compromise your systems’ security.

I’ve already described how to use lists of malicious domain names in a BIND RPZ (Response Policy Zone). Adding an information feed like AlienVault OTX (Open Threat Exchange) to the mix further extends the awareness and detection capabilities.

AlienVault is probably best known for its SIEM (Security Information and Event Management) product, Unified Security Management™, and for the scaled-down open source version, Open Source Security Information and Event Management (OSSIM). The company also provides a platform for sharing threat intelligence: Open Threat Exchange (OTX). OTX is based on registered users sharing security information, for instance domains and hostnames involved in phishing scams or IP addresses performing brute-force SSH login attempts. The information is divided into so-called pulses, each pulse being a set of information items (indicators) considered part of the same malicious activity. For example, a pulse can contain the URLs of a site spreading drive-by malware, the IP addresses of its C&C servers, and hashes of the files involved. Once you have selected which pulses and/or users to subscribe to, the information registered in each pulse is available as a feed from the OTX API.

After carefully reviewing which users and pulses to subscribe to (there’s always a risk of false positives), I now regularly receive an updated feed. This feed is parsed and currently split into two files: one RPZ file containing hostnames and domains for use with BIND, and one file containing IP addresses for use with SiLK.
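The splitting step could look roughly like the sketch below. It is not the parser used for this post: it assumes the feed has already been fetched and flattened into dictionaries with `type` and `indicator` keys, and that the type names are `domain`, `hostname` and `IPv4`. It also assumes the RPZ policy is NXDOMAIN (`CNAME .`); a redirect or another action would need a different record. The function name `split_indicators` is made up for illustration.

```python
import ipaddress

# Assumed type labels in the (already downloaded) feed.
DNS_TYPES = {"domain", "hostname"}
IP_TYPES = {"IPv4"}


def split_indicators(indicators):
    """Return (rpz_lines, ip_lines) built from an iterable of indicator dicts."""
    names = set()
    addresses = set()
    for item in indicators:
        kind = item.get("type")
        value = item.get("indicator", "").strip().lower().rstrip(".")
        if not value:
            continue
        if kind in DNS_TYPES:
            names.add(value)
        elif kind in IP_TYPES:
            try:
                addresses.add(ipaddress.IPv4Address(value))
            except ValueError:
                continue  # skip malformed addresses instead of failing

    # One RPZ record per name; "CNAME ." is the NXDOMAIN policy action.
    rpz_lines = [f"{name} CNAME ." for name in sorted(names)]
    # One address per line, the plain-text input rwsetbuild reads.
    ip_lines = [str(addr) for addr in sorted(addresses)]
    return rpz_lines, ip_lines
```

The RPZ lines are records only; the zone file still needs its usual SOA and NS header before BIND will load it.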

As explained in an earlier post, OSSEC will let me know if someone (or something) makes DNS requests for a domain or hostname registered as malicious. Extending this to include the DNS records obtained from OTX was simply a matter of defining a new RPZ in BIND. Depending on how the zone is used (block? redirect? alert?), a whitelist should be in place to prevent accidental blocking of known good domains. For example, one pulse lists all the Internet resources that a client infected by a certain exploit will contact, including some certificate authorities that are not necessarily evil.
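A whitelist check can be applied before the names are written to the RPZ file. The following is a minimal sketch with hypothetical helper names (`is_whitelisted`, `filter_names`); it treats a name as whitelisted when it equals a whitelisted domain or is a subdomain of one, which is one possible policy among several.

```python
def is_whitelisted(name, whitelist):
    """True if name equals a whitelisted domain or is a subdomain of one."""
    labels = name.lower().rstrip(".").split(".")
    # Test the full name and then each parent domain in turn.
    return any(".".join(labels[i:]) in whitelist for i in range(len(labels)))


def filter_names(names, whitelist):
    """Return the names that are not covered by the whitelist."""
    allowed = {entry.lower().rstrip(".") for entry in whitelist}
    return [name for name in names if not is_whitelisted(name, allowed)]
```

Matching on whole labels means a name such as `example-ca.com.evil.example.net` is not let through just because it contains a whitelisted string.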

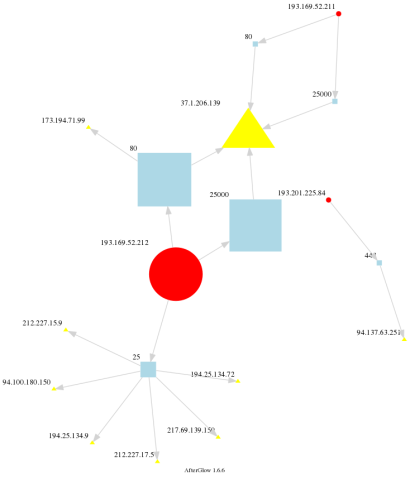

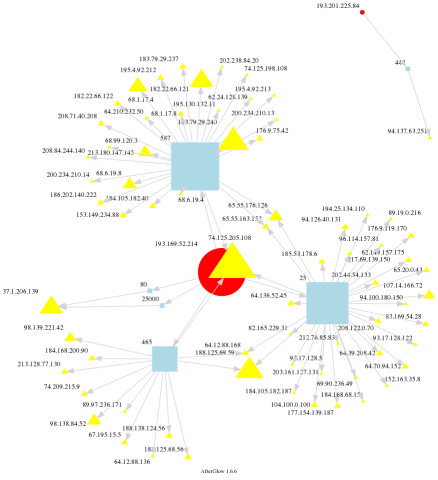

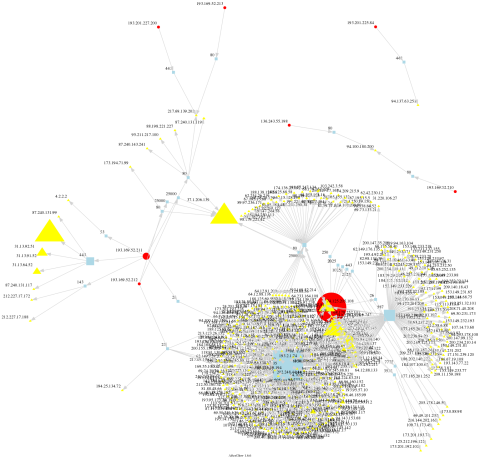

The file with IP addresses can be used directly with a firewall, by logging or even blocking or throttling traffic to/from the IP addresses in question. For rear-view mirror analysis it can be used with SiLK, to find out if there has been any network traffic to or from any of these addresses. To do this, you will first have to create an IP set with the command rwsetbuild:

    # rwsetbuild /some/path/ip-otx.txt /some/path/ip-otx.set

Now we can use this set file in our queries. For this query I’ve manually selected just a few inbound matches:

    # rwfilter --proto=0-255 --start-date=2016/01/01 \
        --sipset=/some/path/ip-otx.set --type=all \
        --pass=stdout | rwcut -f 1-5
                sIP|            dIP|sPort|dPort|pro|
       185.94.111.1|  my.ip.network|60264|   53| 17|
       216.243.31.2|  my.ip.network|33091|   80|  6|
       71.6.135.131|  my.ip.network|63604|  993|  6|
       71.6.135.131|  my.ip.network|60633|  993|  6|
       71.6.135.131|  my.ip.network|60888|  993|  6|
       71.6.135.131|  my.ip.network|32985|  993|  6|
       71.6.135.131|  my.ip.network|33060|  993|  6|
       71.6.135.131|  my.ip.network|33089|  993|  6|
       71.6.135.131|  my.ip.network|33103|  993|  6|
       71.6.135.131|  my.ip.network|33165|  993|  6|
       71.6.135.131|  my.ip.network|33185|  993|  6|
       71.6.135.131|  my.ip.network|33614|  993|  6|
       71.6.135.131|  my.ip.network|33750|  993|  6|
       71.6.135.131|  my.ip.network|60330|  993|  6|
      185.130.5.224|  my.ip.network|60000|   80|  6|
      185.130.5.224|  my.ip.network|60000|   80|  6|
      198.20.99.130|  my.ip.network|    0|    0|  1|
      185.130.5.201|  my.ip.network|43176|   53| 17|
      129.82.138.44|  my.ip.network|    0|    0|  1|
      185.130.5.224|  my.ip.network|60000|   80|  6|
      185.130.5.224|  my.ip.network|60000|   80|  6|
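With more than a handful of matches, it helps to condense the output before looking anything up. The sketch below is an illustration rather than part of the original workflow: it takes pipe-delimited `rwcut` text, as shown above, and counts flows per source address, destination port and protocol. The function name `summarize_rwcut` is hypothetical, and it assumes the header row with the `sIP`, `dPort` and `pro` columns is present.

```python
from collections import Counter


def summarize_rwcut(text):
    """Count flows per (sIP, dPort, pro) in pipe-delimited rwcut output."""
    lines = [line.strip() for line in text.splitlines() if line.strip()]
    if not lines:
        return Counter()

    # The first non-empty line is the header: sIP|dIP|sPort|dPort|pro|
    header = [col.strip() for col in lines[0].rstrip("|").split("|")]
    counts = Counter()
    for line in lines[1:]:
        fields = [col.strip() for col in line.rstrip("|").split("|")]
        row = dict(zip(header, fields))
        counts[(row["sIP"], row["dPort"], row["pro"])] += 1
    return counts


if __name__ == "__main__":
    import sys

    # Example: rwfilter ... --pass=stdout | rwcut -f 1-5 | python summarize.py
    for key, count in summarize_rwcut(sys.stdin.read()).most_common():
        print(count, *key)
```

SiLK’s own `rwuniq` tool covers similar ground without leaving the tool suite; the Python version is mainly useful when the counts feed into other scripts.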

When you need more details about a listed address or another indicator, OTX provides a search form for finding the pulse(s) in which the indicator was registered.

OTX can be used with Bro as well, and there are at least two Bro scripts for updating the feeds from the OTX API. The one that works for me is https://github.com/hosom/bro-otx. The script will make Bro register activity that matches indicators from an OTX pulse.

Sample log entries, modified for readability:

    my.ip.network 59541 some.dns.ip 53 - - - union83939k.wordpress.com Intel::DOMAIN DNS::IN_REQUEST
    my.ip.network 40453 54.183.130.144 80 - - - ow.ly Intel::DOMAIN HTTP::IN_HOST_HEADER
    74.82.47.54 47235 my.ip.network 80 - - - 74.82.47.54 Intel::ADDR Conn::IN_ORIG
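Entries like these come from Bro’s Intel framework, which reads indicators from tab-separated files with a `#fields` header. The sketch below shows how such a file could be produced by hand from a list of indicators. It is not a description of how bro-otx works internally; the input dictionaries (with `type` and `indicator` keys), the type names in `TYPE_MAP` and the function name `to_bro_intel` are assumptions made for illustration.

```python
# Assumed mapping from feed type labels to Bro Intel framework types.
TYPE_MAP = {
    "IPv4": "Intel::ADDR",
    "domain": "Intel::DOMAIN",
    "hostname": "Intel::DOMAIN",
}


def to_bro_intel(indicators, source="OTX"):
    """Render indicator dicts as the text of a Bro intel file."""
    lines = ["#fields\tindicator\tindicator_type\tmeta.source"]
    seen = set()
    for item in indicators:
        bro_type = TYPE_MAP.get(item.get("type"))
        value = item.get("indicator", "").strip()
        # Skip unsupported types, empty values and duplicates.
        if bro_type is None or not value or (value, bro_type) in seen:
            continue
        seen.add((value, bro_type))
        lines.append(f"{value}\t{bro_type}\t{source}")
    return "\n".join(lines) + "\n"
```

The columns must be separated by real tab characters; the resulting file would then be listed in `Intel::read_files` in the Bro configuration.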

This article mentions just a few components that can be combined. Obviously, there are many possibilities for integrating and interfacing different systems. Several companies provide threat intelligence feeds, some for free and some for paying customers. Depending on the product(s), a SIEM would be able to combine the different kinds of threat intelligence and correlate them with detected events.